Encoders

This page explains how to set up encoder operators for kdb Insights Enterprise pipelines using the Web Interface.

Encoding converts data into a format that can be passed to an external system, either by writing the content to a static file or by streaming the data to another system.

Tip

See APIs for more details

Both q and Python interfaces can be used to build pipelines programmatically. See the q and Python APIs for details.

The pipeline builder uses a drag-and-drop interface to link together operations within a pipeline. For details on how to wire together a transformation, see the building a pipeline guide.

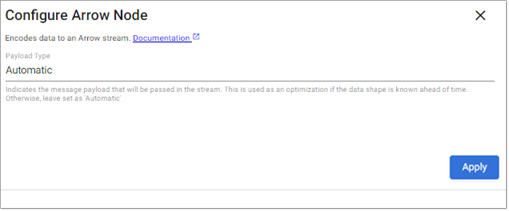

Arrow

(Beta Feature) The Arrow operator encodes kdb+ data as Arrow data.

Note

Beta - For evaluation and trial use only

This feature is currently in beta.

- Refer here to the standard terms related to beta features
- We invite you to use this beta feature and to provide feedback using the Ideas portal
- During deployment, the entitlements feature is disabled by default, meaning no restrictions are applied and you can manage all databases, pipelines, and views as well as query all data in a kdb Insights Enterprise deployment
- When you enable the feature, you do not have access to query data in a database unless you have been given a data entitlement to query the database in question

Note

q and Python APIs: .qsp.encode.arrow

Optional Parameters:

| name | description | default |
|---|---|---|
| Payload Type | Indicates the message payload that is passed in the stream. This is used as an optimization if the data shape is known ahead of time. Otherwise, leave set as | |

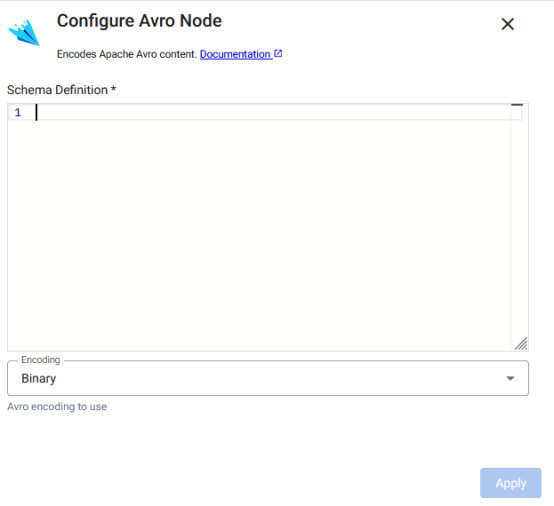

Avro

The Avro encoder converts kdb+ objects into Avro data messages.

You must provide an Avro schema. You can optionally specify the desired message encoding.

Note

Beta - For evaluation and trial use only

This feature is currently in beta.

- Refer here to the standard terms related to beta features
- We invite you to use this beta feature and to provide feedback using the Ideas portal
- During deployment, the entitlements feature is disabled by default, meaning no restrictions are applied and you can manage all databases, pipelines, and views as well as query all data in a kdb Insights Enterprise deployment
- When you enable the feature, you do not have access to query data in a database unless you have been given a data entitlement to query the database in question

Note

q and Python APIs: .qsp.encode.avro

Required Parameters:

| name | description | default |
|---|---|---|
| Schema | A schema definition indicating the Avro message format to be encoded. | |

Optional Parameters:

| name | description | default |
|---|---|---|
| Encoding | Indicates whether to encode data as Avro | |

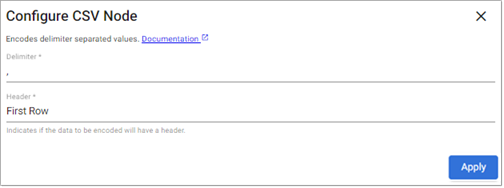

CSV

This operator converts data into CSV format.

Note

q and Python APIs: CSV

Required Parameters:

| name | description | default |
|---|---|---|
| Delimiter | Field separator for the records in the encoded data | `,` |

Optional Parameters:

| name | description | default |
|---|---|---|
| Header | Indicates whether encoded data should start with a header row. Options are | |

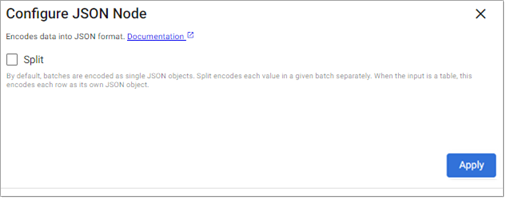
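To illustrate what the Delimiter and Header settings control, here is a minimal sketch using only Python's standard `csv` module. It is not the kdb Insights operator or its API: the `encode_csv` helper, its boolean `header` flag, and the sample rows are hypothetical and exist only to show the effect of each option on the encoded text.

```python
import csv
import io


def encode_csv(rows, delimiter=",", header=True):
    """Encode a list of dicts as CSV text.

    Hypothetical stand-in for illustration only; it does not call
    the kdb Insights CSV encoder.
    """
    if not rows:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf,
        fieldnames=list(rows[0].keys()),
        delimiter=delimiter,       # field separator between values
        lineterminator="\n",
    )
    if header:
        writer.writeheader()       # first line holds the column names
    writer.writerows(rows)
    return buf.getvalue()


# Sample data (made up for this sketch)
rows = [
    {"sym": "AAPL", "price": 189.5},
    {"sym": "MSFT", "price": 402.1},
]

print(encode_csv(rows))                               # comma-separated, with header row
print(encode_csv(rows, delimiter="|", header=False))  # pipe-separated, no header row
```

With the default settings, the first line of the output lists the column names; with the header disabled, the output starts directly with the first record.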

JSON

The JSON operator serializes data into JSON format.

Note

q and Python APIs: JSON

Optional Parameters:

| name | description | default |
|---|---|---|
| Split | By default, batches are encoded as single JSON objects. Split encodes each value in a given batch separately. When the input is a table, this encodes each row as its own JSON object. | No |

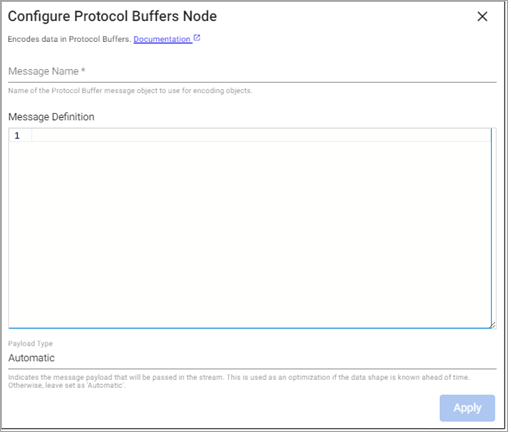
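The difference between the default behavior and Split can be sketched with Python's standard `json` module. This is an illustration under assumptions, not the kdb Insights operator: the `encode_json` helper and the sample batch are hypothetical, and a table is modeled here as a list of row dictionaries.

```python
import json


def encode_json(batch, split=False):
    """Return the list of JSON messages produced for one batch.

    Hypothetical stand-in for illustration only; it does not call
    the kdb Insights JSON encoder.
    """
    if split:
        # One JSON message per value (per row, when the batch is a table)
        return [json.dumps(row) for row in batch]
    # Default: the whole batch becomes a single JSON message
    return [json.dumps(batch)]


# Sample batch (made up for this sketch): a two-row "table"
batch = [
    {"sym": "AAPL", "size": 100},
    {"sym": "MSFT", "size": 250},
]

for message in encode_json(batch):
    print(message)

for message in encode_json(batch, split=True):
    print(message)
```

Without Split, the sketch emits one message containing both rows. With Split, it emits two messages, one per row, which suits downstream systems that expect one record per message.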

Protocol Buffers

The Protocol Buffers operator serializes data into Protocol Buffers.

Note

q and Python APIs: Protocol Buffers

Required Parameters:

| name | description | default |
|---|---|---|
| Message Name | The name of the Protocol Buffer message type to encode | |
| Message Definition | A | |

Optional Parameters:

| name | description | default |
|---|---|---|
| Payload Type | Indicates the message payload that will be passed in the stream. This is used as an optimization if the data shape is known ahead of time. Otherwise, leave set as | |